The Carnegie Mellon lab designing the next phase of computer-human interaction.

If you want a glimpse of the everyday devices we could be using a decade from now, there are worse places to look than the Future Interfaces Group (FIG) lab at Carnegie Mellon University.

During Engadget's recent visit to Pittsburgh, PhD student Gierad Laput put on a smartwatch and touched a MacBook Pro, then an electric drill, then a doorknob. The moment his skin pressed against each one, the object's name popped up on an adjacent computer screen. Each item emitted a unique electromagnetic signal that flowed through Laput's body and was picked up by the sensor on his watch.

The software essentially knew what Laput was doing in dumb meatspace, without a pricey sensor having to be embedded in every object he touched (and its batteries recharged).

But more compelling than this one neat device are the many ways the lab has found to make an entire environment smart using a single gadget.

There's 1950s KGB technology that uses lasers to read vibrations. There's ultrasound software that parses actions like coughing or typing. And there's an overclocked accelerometer on another smartwatch that lets it detect tiny vibrations from analog objects, so it can sense when a user is sawing wood or help them tune an acoustic guitar.

Founded in 2014, the research lab is a nimble, fast-prototyping team of four PhD students led by Chris Harrison, an assistant professor of human-computer interaction. Each grad student has a specialty, such as computer vision, touch, smart environments or gestures. Academic advances in this field move fast and tend to arrive long before widespread (and profitable) industry releases: VR, touchscreens and voice interfaces all first appeared in the 1960s.

Yet many of FIG's projects die right here in the lab, where every glass office window is covered in neat marker-pen bullet points, storyboard panels and grids of colorful Post-its. Harrison says the typical project lasts only six months. Of the hundreds of speculative ideas the lab generates each year, at most 20 are turned into working prototypes, and five to 10 may be published in the research community. Two projects have spun off into funded startups, while a handful have been licensed to third parties (the lab receives funding from companies like Google, Qualcomm and Intel).

Combining machine learning with creative applications of sensors, FIG is trying to find the next ways we'll interface with computers beyond our current modes of voice and touch. Key technologies whose interfaces are yet to be standardized include smartwatches, AR/VR, and the internet of things, says Harrison.

A foundational principle at the lab is to deliver high-tech functions at low cost, or with the devices consumers already own. For instance, Karan Ahuja, a computer vision and machine learning specialist who came from Delhi to join the lab, invented an eye tracker for cardboard VR headsets that relies only on the phone's front-facing camera, with AI deciphering the distorted close-up image. In another experiment, he built software for full-body tracking in VR for two people at once, using each person's phone camera to read the other's movements.

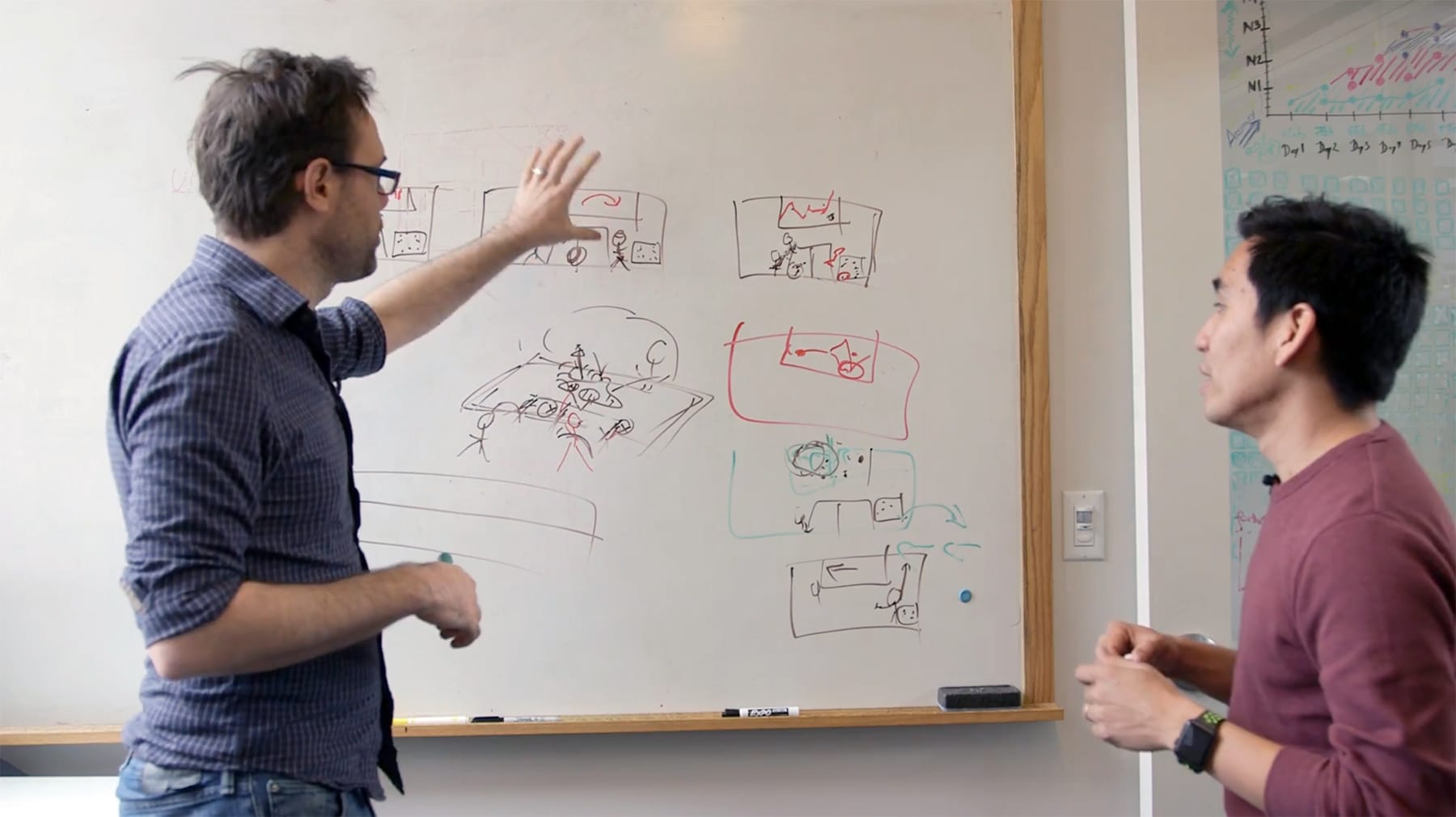

Another tenet of the lab is a focus on real-world applications. During Engadget's visit, Harrison and Laput were brainstorming practical uses for an invention that can turn any flat surface into a touchscreen using a $200 LIDAR sensor. LIDAR is commonly used in self-driving cars, drones and robot vacuums to scan their surroundings with lasers, and the pair expect prices to drop as those devices develop.

They discussed installing the sensor under a generic conference-call speaker, which could turn office tables into interactive surfaces. The table could tell how many people were gathered around it, and attendees could touch the surface to move their own cursors around graphs in a PowerPoint presentation. A successful prototype might earn a couple of seconds in the lab's demo video for the technology, the kind of video the lab publishes regularly and deliberately makes understandable to a non-technical audience.

"Do you think you can code this in five hours?" Harrison asked Laput. He did, and the pantomime corporate conference took place the next morning. The project, named Surface Sight, is as yet unpublished; the paper won't be out until April 2019 at the earliest. By then, the lab will have moved on to the next thing.